VRAM is King.

Key Takeaways

- The Big Shift: How Agentic AI is changing the game.

- Actionable Insight: Immediate steps to secure your AI Privacy.

- Future Proof: Why Local LLMs are the ultimate privacy shield.

The "VRAM" Bottleneck

Here is the secret Nvidia doesn't want you to know: Compute doesn't matter for LLMs. Memory bandwidth matters.

You can have the fastest chip in the world, but if you can't fit the 70B model into memory, you're dead.

The Math (Why 3090s Win)

A brand new RTX 4090 costs $1,800. It has 24GB VRAM. A used RTX 3090 costs $700. It has 24GB VRAM.

Do the math.

For $1,400 (two used 3090s), you get 48GB of VRAM. That is enough to run Llama-3-70B at 4-bit quantization. A single 4090 cannot do this.

The Hype (RTX 4090)

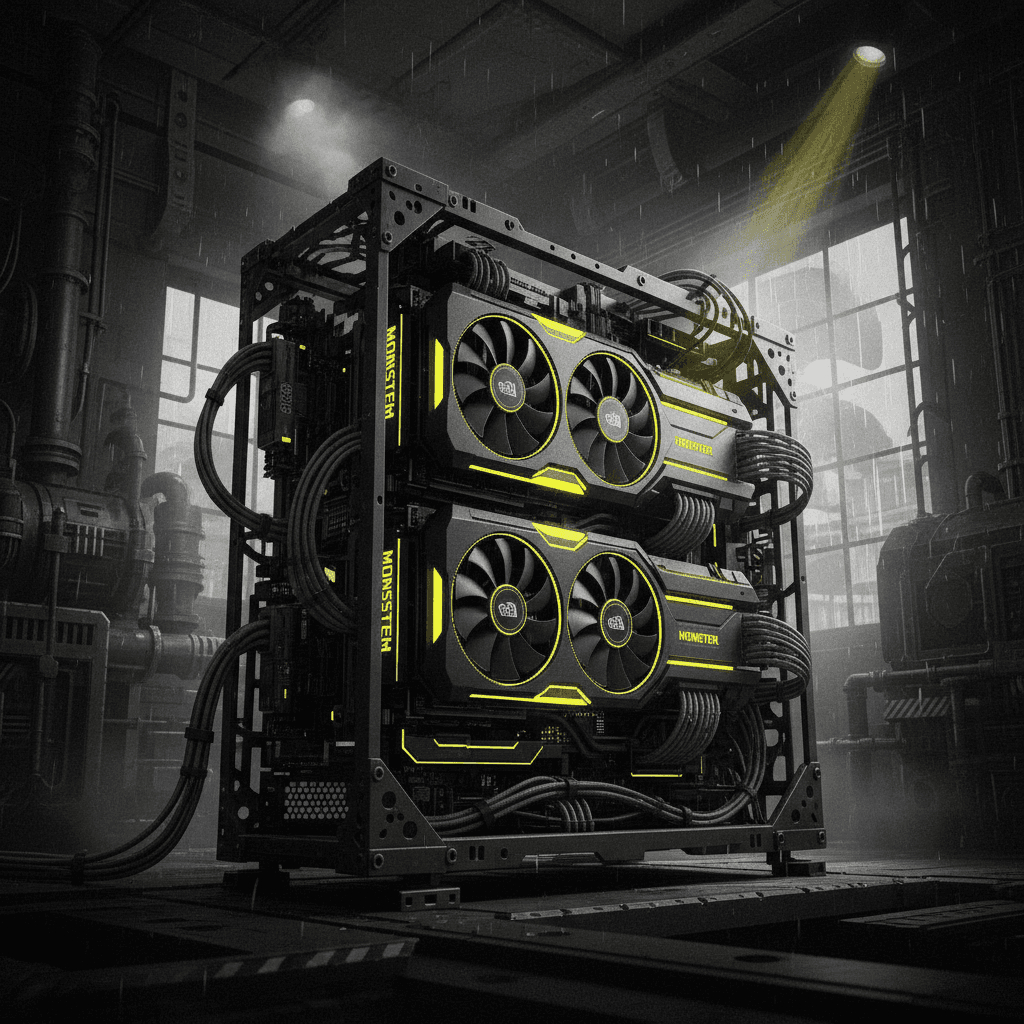

The God Rig (2x 3090)

Join the Vibe Coder Resistance

Get the "Agentic AI Starter Kit" and weekly anti-hype patterns delivered to your inbox.

Join the Vibe Coder Resistance

Get the "Agentic AI Starter Kit" and weekly anti-hype patterns delivered to your inbox.

Join the Vibe Coder Resistance

Get the "Agentic AI Starter Kit" and weekly anti-hype patterns delivered to your inbox.

The "VRAM" Quiz

Think you understand memory bandwidth?

Building the Beast

You need a motherboard with widely spaced PCIe slots. You need a 1200W PSU. You need NVLink (if you can find it, though not strictly necessary for inference).

But when you fire it up? And you talk to a 70B parameter intelligence that lives entirely in your basement?

It feels like owning a nuclear weapon.

USE ARROW KEYS

Conclusion

Don't buy the shiny new thing. Buy the used enterprise gear.

VRAM is the only currency in the AI wars. Hoard it.

Build Your Own Agentic AI?

Don't get left behind in the 2025 AI revolution. Join 15,000+ developers getting weekly code patterns.